Unveiling the Power of Maximum A Posteriori Estimation




In statistics and machine learning, there are many ways to estimate a model's parameters from data. Maximum a posteriori (MAP) estimation is among the most widely used when prior knowledge about the parameter is available. This article covers the principles behind MAP estimation, its practical applications, and the benefits it offers.

Understanding the Essence of MAP Estimation

At its core, MAP estimation finds the most probable value of a parameter given the observed data. It rests on Bayes’ theorem, which states that the posterior probability of an event given evidence is proportional to the product of the prior probability of the event and the likelihood of the evidence given the event.

In the context of MAP estimation, the parameter we aim to estimate becomes the "event," and the observed data constitutes the "evidence." The prior probability represents our existing knowledge or beliefs about the parameter, while the likelihood function quantifies the probability of observing the data given a specific parameter value.

The MAP estimator therefore maximizes the posterior probability, finding the parameter value that best explains the observed data while accounting for our prior knowledge. This contrasts with maximum likelihood estimation (MLE), which maximizes the likelihood function alone and ignores any prior information. When the prior is uniform, the two estimates coincide.

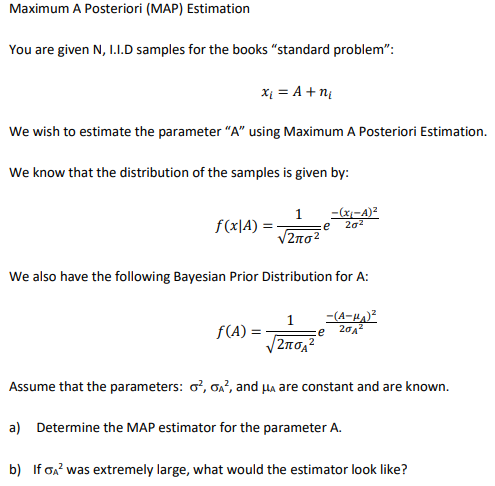

The Mathematical Framework of MAP Estimation

Let’s formalize the concept with mathematical notation. Suppose we have a dataset D consisting of n observations and a parameter θ we wish to estimate. The MAP estimator for θ, denoted as θ_MAP, is obtained by maximizing the posterior probability, P(θ|D):

θ_MAP = argmax(θ) P(θ|D)

Applying Bayes’ theorem, we can rewrite the posterior probability as:

P(θ|D) = [P(D|θ) * P(θ)] / P(D)

where:

- P(D|θ) represents the likelihood function, quantifying the probability of observing the data given θ.

- P(θ) represents the prior probability of θ.

- P(D) represents the marginal probability of the data, which acts as a normalizing constant.

Since P(D) is independent of θ, maximizing the posterior probability is equivalent to maximizing the numerator:

θ_MAP = argmax(θ) [P(D|θ) * P(θ)]

This expression highlights the key components of MAP estimation: the likelihood function, which captures the data’s influence, and the prior distribution, which incorporates our prior knowledge.
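In practice this product is usually maximized in log form, since the logarithm is monotonic and turns products into sums. The following sketch makes one assumption that the text above does not state: the n observations, written here as x_1, ..., x_n, are conditionally independent given θ.

```latex
\begin{aligned}
\theta_{\mathrm{MAP}}
  &= \arg\max_{\theta}\; P(D \mid \theta)\, P(\theta) \\
  &= \arg\max_{\theta}\; \bigl[ \log P(D \mid \theta) + \log P(\theta) \bigr] \\
  &= \arg\max_{\theta}\; \Bigl[ \sum_{i=1}^{n} \log P(x_i \mid \theta) + \log P(\theta) \Bigr]
\end{aligned}
```

The log P(θ) term is the only difference from the MLE objective. With a flat prior it is constant in θ, and the two estimates coincide.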

Illustrative Example: Coin Toss Experiment

Consider a simple example of a coin toss experiment. We toss the coin 10 times and observe 7 heads. Our goal is to estimate the probability of getting heads (θ).

Using MLE, we would simply choose the value of θ that maximizes the likelihood function, which in this case is θ = 0.7 (7 heads out of 10 tosses).

However, if we have prior knowledge that the coin is biased towards heads, we can encode this information in a prior distribution. For instance, we could assume a Beta distribution with parameters α = 2 and β = 1. Its density, 2θ, increases with θ and its mean is 2/3, reflecting a prior belief that the probability of heads is likely to be greater than 0.5.

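With MAP estimation, we combine the likelihood function with this prior. Because the Beta prior is conjugate to the binomial likelihood, the posterior is again a Beta distribution, Beta(α + 7, β + 3) = Beta(9, 4), and its mode gives θ_MAP = (9 − 1) / (9 + 4 − 2) = 8/11 ≈ 0.727. The estimate sits slightly above the MLE of 0.7, reflecting both the observed data and our prior belief.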
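The coin toss example can be checked numerically. The sketch below is illustrative only: the function names are hypothetical, and it assumes the Beta(2, 1) prior and the 7-heads-in-10-tosses data described above. It computes the MAP estimate from the mode of the Beta posterior and confirms it with a simple grid search over the log posterior.

```python
import math


def beta_bernoulli_map(heads, tosses, alpha, beta):
    """Mode of the Beta(alpha + heads, beta + tails) posterior.

    The formula is valid only when both posterior parameters exceed 1,
    so that the mode lies strictly inside (0, 1).
    """
    a = alpha + heads
    b = beta + (tosses - heads)
    if a <= 1 or b <= 1:
        raise ValueError("posterior mode is not interior for these parameters")
    return (a - 1) / (a + b - 2)


def log_posterior(theta, heads, tosses, alpha, beta):
    """Unnormalized log posterior: log-likelihood plus log Beta prior."""
    tails = tosses - heads
    return ((heads + alpha - 1) * math.log(theta)
            + (tails + beta - 1) * math.log(1 - theta))


heads, tosses = 7, 10
alpha, beta = 2, 1  # prior parameters from the example

theta_mle = heads / tosses
theta_map = beta_bernoulli_map(heads, tosses, alpha, beta)

# Numerical check: maximize the log posterior over a fine grid in (0, 1).
grid = [i / 10000 for i in range(1, 10000)]
theta_grid = max(grid, key=lambda t: log_posterior(t, heads, tosses, alpha, beta))

print(f"MLE:               {theta_mle:.4f}")
print(f"MAP (closed form): {theta_map:.4f}")
print(f"MAP (grid search): {theta_grid:.4f}")
```

The closed form and the grid search should agree to within the grid spacing, both close to 8/11.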

Advantages of MAP Estimation

MAP estimation offers several advantages over MLE, particularly in scenarios where prior information is available:

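- Regularization: The prior distribution acts as a regularizer, reducing overfitting by penalizing extreme parameter values. For example, a zero-mean Gaussian prior on the weights corresponds to an L2 penalty, and a Laplace prior to an L1 penalty. This is especially beneficial with limited data or many parameters.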

- Improved Accuracy: When the prior reflects genuine knowledge, MAP estimation often yields more accurate estimates than MLE, especially when the data is noisy or sparse.

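- Handling Unseen Data: The prior keeps estimates away from degenerate values for outcomes that never appear in the data. For instance, a category with zero observed counts receives probability 0 under MLE but a small positive probability under MAP with a suitable prior.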
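The regularization effect can be seen in a small sketch. It assumes a setup not taken from the article: observations drawn from a Gaussian with known noise variance, and a Gaussian prior on the unknown mean. The sample values and function names are hypothetical. Under these assumptions the MAP estimate is a precision-weighted average of the sample mean and the prior mean.

```python
def gaussian_mean_mle(xs):
    """Sample mean, which is the MLE of a Gaussian mean."""
    return sum(xs) / len(xs)


def gaussian_mean_map(xs, noise_var, prior_mean, prior_var):
    """MAP estimate of a Gaussian mean under a Gaussian prior.

    Maximizing the log posterior is the same as minimizing
        sum((x - mu)**2) / (2 * noise_var) + (mu - prior_mean)**2 / (2 * prior_var),
    that is, least squares plus an L2 penalty pulling mu toward prior_mean.
    """
    precision_data = len(xs) / noise_var
    precision_prior = 1.0 / prior_var
    weighted = precision_data * gaussian_mean_mle(xs) + precision_prior * prior_mean
    return weighted / (precision_data + precision_prior)


few = [4.1, 5.3]   # hypothetical small sample
many = few * 50    # the same values repeated: 100 observations

for xs in (few, many):
    mle = gaussian_mean_mle(xs)
    map_est = gaussian_mean_map(xs, noise_var=1.0, prior_mean=0.0, prior_var=1.0)
    print(f"n={len(xs):3d}  MLE={mle:.3f}  MAP={map_est:.3f}")
```

With two observations the MAP estimate is pulled noticeably toward the prior mean of 0. With one hundred it is nearly identical to the MLE, which illustrates why the prior matters most when data is limited.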

Applications of MAP Estimation

MAP estimation finds wide applications in various fields, including:

- Machine Learning: In classification and regression tasks, MAP estimation is used to estimate model parameters, such as weights and biases, based on training data and prior knowledge about the underlying distribution.

- Computer Vision: MAP estimation is employed in image processing and computer vision tasks, such as object detection and image segmentation, to estimate parameters like object locations and shapes.

- Natural Language Processing: MAP estimation is used in language models to estimate probabilities of word sequences, incorporating prior knowledge about word frequencies and grammatical rules.

- Signal Processing: MAP estimation is used to estimate signals from noisy observations, leveraging prior information about the signal characteristics.

FAQs Regarding MAP Estimation

1. How do I choose an appropriate prior distribution?

The choice of prior distribution depends on the specific problem and the available prior knowledge. Common choices include uniform distributions (no preference among values, in which case MAP reduces to MLE), Beta distributions (for probabilities), Gaussian distributions (for real-valued parameters), and Dirichlet distributions (for the probability vector of a categorical distribution).
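As one illustration of a prior from this list, the sketch below pairs a Dirichlet prior with categorical data. The counts, the prior parameters, and the function name are hypothetical. It uses the standard result that the posterior is Dirichlet with parameters α_k + n_k, whose mode is available in closed form when every parameter exceeds 1.

```python
def dirichlet_categorical_map(counts, alphas):
    """Mode of the Dirichlet posterior over categorical probabilities.

    The posterior is Dirichlet(alpha_k + n_k). Its mode is
    (alpha_k + n_k - 1) / (sum_k(alpha_k + n_k) - K),
    valid when every alpha_k + n_k is greater than 1.
    """
    post = [a + n for a, n in zip(alphas, counts)]
    if any(p <= 1 for p in post):
        raise ValueError("mode formula needs every posterior parameter > 1")
    denom = sum(post) - len(post)
    return [(p - 1) / denom for p in post]


counts = [5, 0, 3]  # hypothetical observed counts for three categories
mle = [n / sum(counts) for n in counts]
map_est = dirichlet_categorical_map(counts, alphas=[2, 2, 2])

print("MLE:", [round(p, 3) for p in mle])
print("MAP:", [round(p, 3) for p in map_est])
```

The second category was never observed, so its MLE is exactly 0, while the MAP estimate under this prior stays positive (1/11 here).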

2. Can MAP estimation be used for continuous parameters?

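Yes, MAP estimation applies to both discrete and continuous parameters. For a continuous parameter, the estimate is the mode of the posterior density rather than of a probability mass function. The choice of prior distribution depends on the nature of the parameter.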

3. What are the limitations of MAP estimation?

MAP estimation can be sensitive to the choice of prior distribution, particularly when data is scarce. As a point estimate, it also conveys nothing about the uncertainty in the parameter. Additionally, finding the maximum of the posterior distribution can be computationally challenging for complex models, where the posterior may have several local maxima.

4. How does MAP estimation compare to Bayesian inference?

MAP estimation returns a single point estimate, the mode of the posterior. Full Bayesian inference works with the entire posterior distribution, which allows uncertainty quantification and more nuanced analysis.
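To make the contrast concrete, the sketch below reuses the coin example (7 heads, 3 tails, Beta(2, 1) prior), for which the posterior is Beta(9, 4). It compares the MAP estimate with two summaries that need the whole posterior, using the standard formulas for the mean and variance of a Beta distribution. The variable names are illustrative.

```python
import math

heads, tails = 7, 3
alpha, beta = 2, 1  # prior parameters from the coin example

# Conjugacy: the posterior is Beta(a, b).
a, b = alpha + heads, beta + tails

posterior_mode = (a - 1) / (a + b - 2)  # the MAP estimate
posterior_mean = a / (a + b)
posterior_sd = math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))

print(f"MAP (mode): {posterior_mode:.3f}")
print(f"mean:       {posterior_mean:.3f}")
print(f"std. dev.:  {posterior_sd:.3f}")
```

The mode and the mean differ because the posterior is skewed, and the standard deviation expresses how uncertain the estimate still is after ten tosses. The MAP estimate alone carries neither piece of information.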

5. When is MAP estimation preferred over MLE?

MAP estimation is preferred over MLE when prior knowledge is available and can be incorporated into the estimation process, especially in situations with limited data or high dimensionality.

Tips for Effective MAP Estimation

- Careful Prior Selection: Choose a prior distribution that reflects your prior knowledge accurately and is appropriate for the parameter’s nature.

- Regularization: Treat the spread of the prior as a regularization strength. A narrow prior pulls the estimate strongly toward the prior’s center, while a broad prior lets the data dominate.

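- Optimization Techniques: Maximize the log posterior rather than the posterior itself, which is numerically more stable. Use a closed-form solution where a conjugate prior provides one, and an efficient numerical optimizer otherwise, keeping computational cost in mind.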

- Model Validation: Validate the chosen model and the resulting estimate using appropriate metrics and cross-validation techniques.
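When no closed form is available, the log posterior can be maximized numerically. The sketch below is one possible approach rather than a recommended method: it applies golden-section search, a derivative-free technique for unimodal one-dimensional functions, to the negative log posterior of the coin example so that the result can be compared with the known answer of 8/11. The function names and default arguments are hypothetical.

```python
import math


def neg_log_posterior(theta, heads=7, tails=3, alpha=2.0, beta=1.0):
    """Negative unnormalized log posterior for the coin example."""
    return -((heads + alpha - 1) * math.log(theta)
             + (tails + beta - 1) * math.log(1 - theta))


def golden_section_minimize(f, lo, hi, tol=1e-8):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5) - 1) / 2
    c = hi - inv_phi * (hi - lo)
    d = lo + inv_phi * (hi - lo)
    while hi - lo > tol:
        if f(c) < f(d):
            hi = d  # the minimum lies in [lo, d]
        else:
            lo = c  # the minimum lies in [c, hi]
        c = hi - inv_phi * (hi - lo)
        d = lo + inv_phi * (hi - lo)
    return (lo + hi) / 2


# Stay strictly inside (0, 1) so that both logarithms are defined.
theta_map = golden_section_minimize(neg_log_posterior, 1e-6, 1 - 1e-6)
print(f"numerical MAP: {theta_map:.5f}")
```

Working with the log posterior avoids the underflow that products of many small probabilities would cause. For models with many parameters, gradient-based optimizers are the more common choice.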

Conclusion

MAP estimation is a powerful tool for parameter estimation when prior knowledge is available. By combining the likelihood function with a prior distribution, it gives a more informed and robust estimate than MLE, which can improve accuracy and generalization, particularly with limited data. Its use across machine learning, computer vision, natural language processing, and signal processing reflects how broadly the idea applies.
